A novel multimodal needs assessment to inform the longitudinal education program for an international interprofessional critical care team

BMC Medical Education volume 22, Article number: 540 (2022)

Abstract

Background

The current global pandemic has caused unprecedented strain on critical care resources, creating an urgency for global critical care education programs. Learning needs assessment is a core element of designing effective, targeted educational interventions. In theory, multimodal methods are preferred to assess both perceived and unperceived learning needs in diverse, interprofessional groups, but a robust design has rarely been reported. Little is known about the best approach to determine the learning needs of international critical care professionals.

Method

We conducted multimodal learning needs assessment in a pilot group of critical care professionals in China using combined quantitative and qualitative methods. The assessments consisted of three phases: 1) Twenty statements describing essential entrustable professional activities (EPAs) were generated by a panel of critical care education experts using a Delphi method. 2) Eleven Chinese critical care professionals participating in a planned education program were asked to rank-order the statements according to their perceived learning priority using Q methodology. By-person factor analysis was used to study the typology of the opinions, and post-ranking focus group interviews were employed to qualitatively explore participants' reasoning of their rankings. 3) To identify additional unperceived learning needs, daily practice habits were audited using information from medical and nursing records for 3 months.

Results

Factor analysis of the rank-ordered statements revealed three learning need patterns with consensual and divergent opinions. All participants expressed significant interest in further education on organ support and disease management, moderate interest in quality improvement topics, and relatively low interest in communication skills. Interest in learning procedure/resuscitation skills varied. The chart audit revealed suboptimal adherence to several evidence-based practices and under-perceived practice gaps in patient-centered communication, daily assessment of antimicrobial therapy discontinuation, spontaneous breathing trial, and device discontinuation.

Conclusions

We described an effective mixed-methods assessment to determine the learning needs of an international, interprofessional critical care team. The Q survey and focus group interviews prioritized and categorized perceived learning needs. The chart audit identified additional practice gaps that were not identified by the learners. Multimodal methods can be employed in cross-cultural scenarios to customize and better target medical education curricula.

Introduction

Critical care professionals need continuing education to sustain their competence in a broad range of knowledge, skills, and attitudes demanded by subspecialty practice. They face challenges incorporating new evidence-based practices that continue to emerge at a rapid pace. Their education needs are particularly urgent in emerging intensive care settings in economically developing countries [1], prompting the World Health Organization (WHO) and international subspecialty societies to advocate for increased education programs in these areas [2, 3].

To facilitate timely and accurate delivery of best practices to critically ill patients, a group of international critical care physicians and researchers developed the Checklist for Early Recognition and Treatment of Acute Illness and Injury (CERTAIN) program [4, 5], a structured approach to critically ill patients. The CERTAIN study group has provided interprofessional, competency-based training for more than a thousand intensive care physicians and nurses in more than 50 countries, and demonstrated improved adoption of evidence-based best practices in 36 intensive care units in 15 different countries [6, 7]. Based on a longitudinal pilot intervention that demonstrated successful integration of CERTAIN practices in an intensive care unit (ICU) in Bosnia and Herzegovina, the investigators designed a longitudinal education and quality improvement program (Knowledge Translation into Practice, KTIP) targeting an international audience. Our goal is to maximize the impact of this intervention by customizing the curriculum to individual learning needs [8].

There have been considerable efforts over the past decade to strengthen the instructional design, delivery, and outcomes measurement of continuing medical education programs. A well-conducted needs assessment is considered a core contributor to the success of the educational program [9]. Systematic reviews have shown that programs predicated on a well-designed needs assessment are more effective in changing physician behaviors [10, 11]. However, robust learning needs assessment models are rarely reported in the literature, and there is little agreement on how to measure learning needs among international healthcare professionals [12]. This is in part due to the various possible states of self-knowledge commonly described using the Johari window (Supplemental Table 1) [13]. Learning needs within the Johari window framework can be classified as perceived or unperceived. Qualitative methods, such as informal discussions, questionnaires, or structured interviews are often used to invite the learners to express their perceived learning needs. However, learners may remain 'blind' to their unperceived learning needs despite these activities. As Sibley et al. observed, medical practitioners tend to pursue education around topics in which they excel, while avoiding areas in which they are deficient [14]. Quantitative methods, such as chart audits, tests, or direct observation of practice habits, are required to reveal unperceived learning needs (Supplemental Table 2) [15,16,17]. For learning needs assessment to be robust, it should use mixed techniques combining qualitative and quantitative data from a probabilistic sample that includes employees with diverse roles and different skill and experience levels [18]. Unlike previous studies that were predominantly survey-based [12], this study proposed a novel learning needs assessment process using mixed methods and implemented it in a pilot group of international critical care professionals.

First described in 1953 by psychologist and physicist William Stephenson, Q method is a systematic, semi-quantitative study of subjectivity [19]. Different from traditional surveys that provide a summary of opinions, Q method categorizes participants and identifies consensual and divergent opinions within a study population using by-person factor analysis [20, 21]. It has been used frequently in medical settings to identify physicians' and nurses' learning preferences [22,23,24]. In the field of critical care, the learners are often a heterogeneous group of physicians, nurses, and other medical professionals. Thus, Q method was ideal to identify the typology of learners' needs. Using the Q method to divide learners into subgroups, we subsequently conducted focus group interviews with each subgroup to investigate the rationale behind their identified learning priorities, as well as structured chart audits to identify unperceived learning needs. The three combined stages add up to a novel learning needs assessment model that is different from the previously reported single-method models.

Methods

Study subjects

In 2020, the CERTAIN investigators agreed to provide remote, structured longitudinal training using the KTIP program at Shengli (Victory) Oilfield Central Hospital in Dongying, Shandong, China. Dongying is a coastal city with a population of nearly two million. The large public community hospital has 1891 beds, including a mixed 27-bed ICU. Twenty-four critical care professionals participated in the training programs, including 20 physicians and 4 nurses. There were 8 female and 16 male participants. Their demographic features are listed in Table 1. All participants had bachelor's or master's degrees.

Prior to the start of training, a convenience sample of seven physicians and four nurses was selected to participate in a learning needs assessment. The team's daily medical and nursing records were also audited for 3 months to identify unperceived learning needs. Oral consent and written agreement for training activities were obtained from all participants. The study was approved by the Mayo Clinic Institutional Review Board (20–007896) and the Ethics Committee at Shengli Oilfield Central Hospital (Q/ZXYY-ZY-YWB-LL202039).

Multimodal learning needs assessments

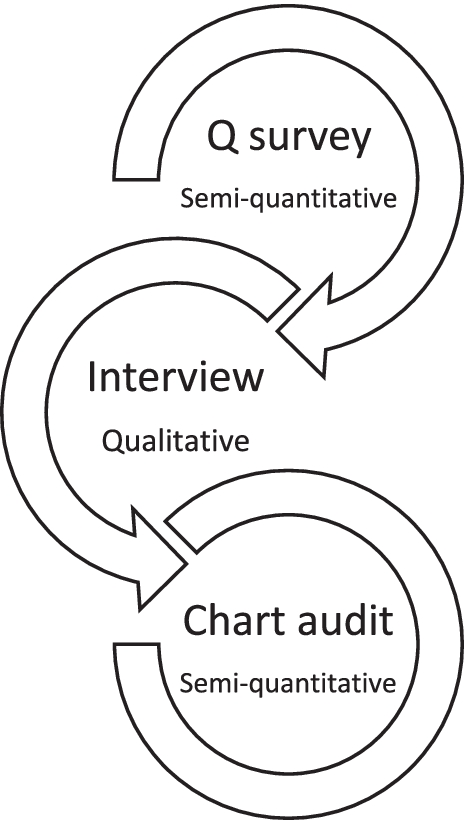

To design a multimodal learning needs assessment process that can be used for diverse international critical care groups, we chose a combination of the Q method survey, focus group interviews, and chart audits as the basis of our learning needs assessment model (Fig. 1).

Structured design of learning needs assessments

Phase 1. Preparation of Q set

The first step in the Q method was to generate a set of statements (Q set) describing essential critical care performance elements that would be reviewed and ranked by the learners later. The investigators chose to describe these core performance elements in the form of entrustable professional activities (EPAs). EPA is a common conceptual tool in competency-based graduate medical education. Each EPA is an independently executable, observable, and measurable task or responsibility to be entrusted to the unsupervised execution by a trainee once he or she has attained sufficient specific competence [25, 26].

The investigators reviewed and compared published literature on critical care educational objectives and existing critical care curricula, such as American board certification blueprints [27, 28], American critical care training programs (i.e., the Fundamentals of Critical Care Support Course, offered by the Society of Critical Care Medicine) [29], Accreditation Council for Graduate Medical Education (ACGME) Reporting Milestones [30], the TeamSTEPPS® curriculum [31], and the ACGME Clinical Learning Environment Review Pathways to Excellence [32]. The investigators then drafted 40 candidate statements, using the ACGME Reporting Milestones as a guide to content selection, and compared them to published Chinese critical care competency standards [33] to ensure all relevant content domains were considered. Each statement described one critical care EPA.

The list of EPA statements was next refined and narrowed by a second group of critical care educational experts (n = 5) using the Delphi method. These experts were all program directors of critical care fellowship programs. They worked independently from the authors of this study. During the first Delphi round, participants were asked to independently rate each of the 40 EPAs using the statement "I would like to include this EPA in a list describing the critical care activities demonstrated at the end of this training program" on a 5-point Likert scale. During the second Delphi round, the same group of participants received the same rating sheet with their individual round one rating, together with the distribution of the other group members' ratings and the calculated mean and median. Participants were then asked to independently re-rate each EPA against the same statement as in round one, this time answering yes/no for each individual EPA to select a total of 20 EPAs to include in the final list. The second round was repeated until a consensus was achieved on a final list of 20 EPAs.
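The round-two feedback described above (each EPA's rating distribution, mean, and median) can be sketched as follows. This is a minimal illustration only: the function name `summarize_round`, the EPA identifiers, and the ratings are hypothetical and do not come from the study data.

```python
from statistics import mean, median


def summarize_round(ratings):
    """Summarize round-one Likert ratings per EPA for round-two feedback.

    ratings: dict mapping an EPA identifier to the list of 1-5 ratings,
    one rating per expert.
    """
    summary = {}
    for epa, scores in ratings.items():
        summary[epa] = {
            "mean": mean(scores),
            "median": median(scores),
            # How many experts chose each point on the 5-point scale
            "distribution": {level: scores.count(level) for level in range(1, 6)},
        }
    return summary


# Hypothetical ratings from five experts for two candidate EPAs
example_ratings = {"EPA-01": [5, 4, 5, 3, 4], "EPA-02": [2, 3, 1, 2, 2]}
feedback = summarize_round(example_ratings)
```

Each expert would then see this summary next to their own round-one rating before re-rating.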

Phase 2.1 Q method survey

The Q method survey was distributed to Chinese participants via an online webpage using HTMLQ. The participants were asked to sort a set of digital cards, each with a single EPA, into 'more important', 'less important', and 'neutral' docks. Then they were asked to place the cards onto a pre-defined grid associated with an anchored scale based on their perceived learning priorities (Supplement Fig. 1). Each participant's ranking pattern was transformed into an array of numerical data according to the grid position in which each statement was placed. The statement that was placed at the 'most important' end of the distribution received a score of +4, the next two statements received +3, the next two statements received +2, and so forth, all the way down to the statement that was considered 'least important', which received a score of −4. Statements placed in the middle of the grid were assigned scores of 0. All participants' arrays of numerical data formed a correlation matrix, from which a set of 'factors' was extracted. Each factor represented a cluster of similar ranking patterns. The factor analysis also identified key consensual or divergent opinions that shaped the patterns of opinions. The data analysis was performed in Ken-Q Analysis, a web application for Q methodology (Shawn Banasick, 2019, Version 1.0.6) [34].
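The scoring and correlation steps described above can be sketched in a few lines. This is an illustration under stated assumptions, not the Ken-Q Analysis implementation: the text gives only the first steps of the grid (+4 once, +3 twice, +2 twice), so the full slot counts in `ASSUMED_GRID` are a guess at a symmetric 20-slot distribution, and the function names and the two example participants are hypothetical.

```python
import math

# Assumed forced distribution for a 20-statement Q set: score -> number of slots.
# Only the +4, +3 and +2 counts are stated in the text; the rest is an assumption.
ASSUMED_GRID = {4: 1, 3: 2, 2: 2, 1: 3, 0: 4, -1: 3, -2: 2, -3: 2, -4: 1}


def scores_from_ranking(ordered_statements, grid=ASSUMED_GRID):
    """Assign grid scores to statements listed from most to least important."""
    if len(ordered_statements) != sum(grid.values()):
        raise ValueError("ranking length must match the number of grid slots")
    scores = {}
    position = 0
    for score in sorted(grid, reverse=True):
        for _ in range(grid[score]):
            scores[ordered_statements[position]] = score
            position += 1
    return scores


def pearson(sort_a, sort_b):
    """Pearson correlation between two participants' Q sorts."""
    statements = sorted(sort_a)
    xs = [sort_a[s] for s in statements]
    ys = [sort_b[s] for s in statements]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(sorts):
    """By-person correlation matrix: participants, not statements, are the variables."""
    names = list(sorts)
    return {p: {q: pearson(sorts[p], sorts[q]) for q in names} for p in names}


# Hypothetical participants: B ranks the statements in exactly the reverse order of A.
sort_a = scores_from_ranking(list(range(1, 21)))
sort_b = scores_from_ranking(list(range(20, 0, -1)))
matrix = correlation_matrix({"A": sort_a, "B": sort_b})
```

Because the assumed grid is symmetric, two exactly opposite rankings correlate at −1. Factor extraction would then operate on this by-person matrix, which is what distinguishes Q methodology from a conventional by-item factor analysis.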

Phase 2.2 post survey interview

The factor analysis identified learners who had similar ranking patterns and thus categorized them into subgroups. Focus group interviews with the participants were conducted in their native language, Mandarin Chinese. Participants were invited to review their individual ranking and the common ranking pattern shared by the subgroup. Then the investigator asked them to describe the reasoning behind their ranking choices. The interviews were recorded and transcribed into English for further qualitative review. The transcription was reviewed by two investigators to identify keywords and concepts using a thematic analysis approach.

Phase 3 chart audit

Medical and nursing records were reviewed for the learning needs assessment over a consecutive three-month period prior to the start of the KTIP program, at the same time as the participants completed the surveys described in phase 2. All adult patients who were admitted to the ICU for critical illness were included. The charts of the sampled patients were audited at the time of admission, then on day 0, day 1, day 2, day 3, day 7, day 14, day 21, and day 28, if documentation was available on that date. Data were de-identified and documented in a series of care process documentation sheets based on the framework developed by the Institute for Healthcare Improvement in the United States [35].

The investigators focused on metrics that reflected adherence to commonly accepted best critical care and patient-centered care practices. For recommended daily best practices, incidence rates of non-adherence were calculated using the number of observed non-adherence events divided by the total observation days.
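The rate calculation described above is a simple ratio of non-adherence events to observation days. A minimal sketch follows; the function name and the numbers in the example are hypothetical and are not taken from the audit results.

```python
def non_adherence_rate(non_adherence_events, observation_days):
    """Incidence rate of non-adherence: observed events per observation day."""
    if observation_days <= 0:
        raise ValueError("observation_days must be positive")
    if non_adherence_events < 0:
        raise ValueError("non_adherence_events cannot be negative")
    return non_adherence_events / observation_days


# Hypothetical example: 25 days without a documented assessment out of 100 observed days
rate = non_adherence_rate(25, 100)
```

Using observation days rather than patients as the denominator lets patients with longer stays contribute more observations to the rate.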

Results

Learning needs assessment participant baseline characteristics

Eleven critical care professionals from a mixed medical/surgical/cardiac ICU formed the convenience sample group for the perceived learning needs assessment, including 4 nurses and 7 physicians (Table 1). Three participants were male. Their mean age was 38.5 years (standard deviation: 4.9 years). They had a mean of 10.9 years (standard deviation: 4.0 years) of clinical critical care experience.

Q method survey

A Q set of 20 EPA statements was generated by critical care education experts after a two-round, one-cycle Delphi process (Table 2). The statements covered five essential domains of critical care practice: organ support and disease management (13 statements), practical skills (2 statements), quality improvement (1 statement), patient-centered care and communication (1 statement), and interprofessional skills (3 statements).

Three subgroups were identified using the Q survey and factor analysis. Each subgroup was represented by a 'factor', a ranking list of the 20 EPAs that reflected the learning priorities expressed by the subgroup. Three participants demonstrated correlation with factor 1 (subgroup 1), five participants correlated with factor 2 (subgroup 2), and three participants (VII, X, XI) correlated with factor 3 (subgroup 3). Seven out of eleven participants' correlation achieved statistical significance (p < 0.05) (Table 1).
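The text does not state how the significance of each participant's correlation with a factor was determined. One convention commonly used in Q methodology treats a factor loading as significant at p < 0.05 when its absolute value exceeds 1.96 divided by the square root of the number of statements. The sketch below illustrates that convention only; it is an assumption that it matches the authors' analysis, and the function names and loadings are hypothetical.

```python
import math


def loading_threshold(n_statements, z=1.96):
    """Conventional cutoff for a significant factor loading (z = 1.96 for p < 0.05)."""
    return z / math.sqrt(n_statements)


def flag_significant(loadings, n_statements):
    """Return, per participant, whether the absolute loading exceeds the cutoff."""
    cutoff = loading_threshold(n_statements)
    return {person: abs(value) > cutoff for person, value in loadings.items()}


# Hypothetical loadings on one factor for a 20-statement Q set
cutoff = loading_threshold(20)
flags = flag_significant({"P1": 0.72, "P2": 0.30}, 20)
```

With 20 statements the cutoff is roughly 0.44, so under this convention a participant would need a fairly strong loading to be counted as a significant exemplar of a factor.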

All subgroups expressed high interest in several EPAs about organ support and disease management. For example, subgroups 1 and 2 ranked 'Evaluate and manage patients with shock' (statement 6) as the most important learning objective, while subgroup 3 valued 'Evaluate and manage perioperative patients' (statement 20) most highly. All subgroups expressed moderate interest in quality improvement, low to moderate interest in interprofessional skills, and low interest in patient-centered communication. The three subgroups had different opinions on learning procedure/resuscitation skills: subgroup 1 participants were not interested, whereas subgroups 2 and 3 were moderately or highly interested (Table 3).

Post survey interviews

The interviews were conducted in three subgroups. Thematic analysis identified a number of consensual or divergent concepts. The associated quotes are listed in Table 4.

1) Overall impression from the ranking activities: eager to learn

Many participants explained that they were interested in all EPAs, including the ones they ranked as less important.

2) Organ support and disease management: strong interest

The learners expressed strong interest in refining their organ support techniques, especially for their sickest patients. Their needs were deeply rooted in their daily practice and extended to nuances of therapeutics. Five participants (I, II, III, V, VI) expressed that they were eager to learn more about Positive End Expiratory Pressure (PEEP) titration for refractory respiratory failure. Advanced life support, liberation from prolonged mechanical ventilation, and nutrition support for the critically ill were mentioned frequently by learners.

3) Quality improvement: moderate interest

Several participants expressed interest in standardizing and protocolizing commonly performed practices. 'Flowcharts' and 'algorithms' were desired. Interest in antibiotic stewardship was expressed by participant I.

4) Interprofessional skills: low to moderate interest

Although structured rounds are conducted daily, the clinicians lacked a template for sharing information on rounds. Some participants shared the need for a communication tool that assists multidisciplinary collaboration.

5) Patient-centered communication: low interest

The participants explained that patient-centered communication was indeed considered important, but not as important as disease management. Some participants shared that the survey activity reminded them about the importance of patient engagement. Their main concern about conducting international education on patient-centered communication was that it may be less feasible than other educational efforts because of language barriers and differing cultural norms.

6) Procedure/resuscitation skills: variable interest

Opinions regarding procedure/resuscitation skills were variable. The experienced physicians expressed confidence in performing procedures. However, the physician in charge of the residency program (participant I) pointed out that needs existed among the doctors in training.

Chart audit

We reviewed 101 patient charts during the audit period. Eighty-five patients had baseline information on the day of admission that is summarized in Supplemental Table 3.

One hundred and one patients had care process data available over a total of 436 observed days. Data detailing non-adherence incidence rates are summarized in Table 5. Adherence to deep vein thrombosis (DVT) prophylaxis was high in all patients. We also observed high adherence to oral hygiene, head of bed elevation, peptic ulcer prevention, and sedation discontinuation assessment in mechanically ventilated patients. The documented assessment rates of central line removal, urinary catheter removal, spontaneous breathing trial, and antimicrobial therapy discontinuation were suboptimal. Family discussions were documented infrequently.

Discussion

Although a needs assessment is a well-accepted element of instructional design, the best approach to define the learning needs of an international audience to inform the longitudinal delivery of a virtual critical care curriculum has not been described. In this study, we described a multi-stage, mixed-method learning needs assessment model that is different from any previously reported assessment tool. This approach enabled us to better understand not only the practice but also the cultural context of our learner group. The pilot group expressed strong interest in education on organ support and disease management topics, and moderate interest in quality improvement. Interest in interprofessional communication and patient-centered communication was relatively low, and interest in learning procedure/resuscitation skills was mixed. While chart audit demonstrated a high level of routine completion of many elements of evidence-based daily care, it also identified opportunities for improvement in discontinuation of invasive devices, assessing for spontaneous breathing trial, and antimicrobial therapy discontinuation. Communication with family was another potential underperceived learning need. This learning needs assessment model will enable us to develop a meaningful collaboration more quickly and deliver our virtual international education program more effectively.

Post-graduate critical care education in China is transitioning from a traditional to a competency-based model [33]. The first nationwide agreement about evaluation and accreditation of Chinese critical care trainees was published in 2016, to describe the minimum required competencies for a critical care physician [33]. The list of competencies, consisting of 129 competencies in 11 domains, was determined by a task force summoned by the Chinese College of Intensive and Critical Care Medicine (CCICCM). The list provided guidance to learners, their supervisors, and institutions in teaching and assessment. However, its length and the theoretical language employed present barriers to its direct use in learning needs assessments.

Previously reported learning needs assessments for Chinese medical professionals were predominately survey-based. Guo et al. conducted a 123-item learning needs survey among medical educators in China, aimed at identifying interest in various topics and perceived benefits and barriers of participating in faculty development programs [36]. Most study participants were hospital presidents or deans, potentially limiting the generalizability of these findings to the diverse learning needs of bedside interprofessional teams. The survey-based design could only reflect the responders' perception of their learning preference, leaving unperceived learning needs uninvestigated.

In our study, the multimodal method had several strengths. First, Q method allowed us to study the typology of opinions within a heterogeneous group with a broad range of competencies, while traditional survey-based or test-based learning needs assessments are designed for groups with similar skillsets, such as medical students, nurses, or residents. We created EPAs to translate the theoretical concepts of 'competencies' into the core elements of practice. This list served as the foundation of the Q survey among interprofessional practitioners. Second, the mixed-method design was powerful in creating a complete, unbiased assessment. Chart audit, which is still rarely used for learning needs assessment of medical professionals, is especially useful in identifying unperceived learning needs, increasing awareness of practice weaknesses, and improving learning motivation. For example, chart audit in this study suggested the learners' communication with families may not be adequate, information that could have been missed if the investigators only focused on learner responses. Chart audit also detected a suboptimal documentation rate of antimicrobial therapy discontinuation assessment, highlighting a need for better antimicrobial stewardship reflected by only one member of the learner group. Without the data from the chart audit, her valuable individual opinion could have been overlooked. By gathering subjective and objective data, we made our inferences about learning needs more robust.

The study has several limitations. First, to make the ranking activity convenient and feasible, the Q set only contained 20 EPAs. Recognizing the diverse nature of critical care practice, some learning needs could have been left 'hidden' if not properly described in the Q set. Moreover, although Q methodology is designed to study typology within a population, its semi-quantitative nature often results in multiple solutions of classification, while some opinions remain unclassifiable. Lastly, to maximize remote feasibility and limit cost we used chart audit as a surrogate for direct observation of participant daily clinical practices. This could have led to an underestimation of actual adherence to best practice based on documentation habits in the medical record.

Conclusion

Multimodal learning needs assessment is feasible in interprofessional critical care groups and can be conducted remotely. Our methods identified our learners' needs in various domains and effectively differentiated both divergent perceived and unperceived learning needs important for planning our educational intervention. These structured, yet flexible methods offer important tools to facilitate acceptance, engagement, and adoption of our customized critical care curriculum within the complex context of our learners' practice environment. Our findings also indicated that addressing communication with patients and families may be challenging because of the difference in expectations and cultural norms. These results will help the investigators design an education program that includes both case-based discussions that provide a clinical context for discussions on common diagnostic and therapeutic challenges encountered in critical care, and remote simulation experiences to introduce a structured multidisciplinary rounding format to remind bedside teams to assess the necessity of devices and antimicrobial therapy. We are also partnering with our Chinese colleagues to better understand the best approach to discussions with families within their cultural and clinical context.

Availability of data and materials

The data used for this research are available from the corresponding author on reasonable request and are subject to Institutional Review Board guidelines.

Abbreviations

- EPA: Entrustable Professional Activity

- WHO: World Health Organization

- CERTAIN: Checklist for Early Recognition and Treatment of Acute Illness and Injury

- ICU: Intensive Care Unit

- KTIP: Knowledge Translation into Practice

- ACGME: Accreditation Council for Graduate Medical Education

- PEEP: Positive End Expiratory Pressure

- DVT: Deep Vein Thrombosis

- CCICCM: Chinese College of Intensive and Critical Care Medicine

References

-

Fowler RA, Adhikari NK, Bhagwanjee S. Clinical review: critical care in the global context–disparities in burden of illness, access, and economics. Crit Care. 2008;12(5):1–6.

-

Geiling J, Burkle FM Jr, Amundson D, Dominguez-Cherit G, Gomersall CD, Lim ML, et al. Resource-poor settings: infrastructure and capacity building. Chest. 2014;146(4):e156S–e67S.

-

World Health Organization. Health Economics: cost effectiveness and strategic planning (WHO-CHOICE). [Available from: https://www.who.int/choice/cost-effectiveness/en/.

-

Vukoja M, Kashyap R, Gavrilovic S, Dong Y, Kilickaya O, Gajic O. Checklist for early recognition and treatment of acute illness: international collaboration to improve critical care practice. World J Crit Care Med. 2015;4(1):55–61.

-

Barwise A, Garcia-Arguello L, Dong Y, Hulyalkar M, Vukoja M, Schultz MJ, et al. Checklist for early recognition and treatment of acute illness (CERTAIN): evolution of a content management system for point-of-care clinical decision support. BMC Med Info Decis Mak. 2016;16(1):1–10.

-

Min S, Kashyap R, Arguello LG, Kaur H, Hulyalkar M, Barwise A, et al. Remote simulation training with Certain checklist in 11 countries. Crit Care Med. 2015;43(12):1485431.

-

Vukoja M, Dong Y, Hache-Marliere M, Adhikari N, Schultz M, Arabi Y, et al. 38: CERTAIN: an international quality improvement study in the intensive care unit. Crit Care Med. 2019;47(1):19.

-

Kovacevic P, Dragic S, Kovacevic T, Momcicevic D, Festic E, Kashyap R, et al. Impact of weekly case-based tele-education on quality of care in a limited resource medical intensive care unit. Crit Care. 2019;23(1):220.

-

Laxdal O. Needs assessment in continuing medical education: a practical guide. J Med Educ. 1982;57(11):827–34.

-

Fox RD, Bennett NL. Continuing medical education: learning and change: implications for continuing medical education. BMJ. 1998;316(7129):466.

-

Davis N, Davis D, Bloch R. Continuing medical education: AMEE education guide no 35. Med Teach. 2008;30(7):652–66.

-

Ferreira RR, Abbad G. Training needs assessment: where we are and where we should go. BAR-Brazilian Admin Rev. 2013;10(1):77–99.

-

Ingham H, Luft J. The Johari window: a graphic model for interpersonal relations. Los Angeles: Proceedings of the western training laboratory in group development; 1955.

-

Sibley JC, Sackett DL, Neufeld V, Gerrard B, Rudnick KV, Fraser W. A randomized trial of continuing medical education. N Engl J Med. 1982;306(9):511–5.

-

Ratnapalan S, Hilliard RI. Needs assessment in postgraduate medical education: a review. Med Educ Online. 2002;7(1):4542.

-

Thomas PA, Kern DE, Hughes MT, Chen BY. Curriculum development for medical education: a six-step approach. Baltimore: Johns Hopkins University Press; 2016.

-

Hauer J, Quill T. Educational needs assessment, development of learning objectives, and choosing a teaching approach. J Palliat Med. 2011;14(4):503–8.

-

While A, Ullman R, Forbes A. Development and validation of a learning needs assessment scale: a continuing professional education tool for multiple sclerosis specialist nurses. J Clin Nurs. 2007;16(6):1099–108.

-

Stephenson W. Notes from the study of behavior. Chicago: The University of Chicago Press; 1953.

-

Gaebler-Uhing C. Q-methodology: a systematic approach to assessing learners in palliative care education. J Palliat Med. 2003;6(3):438–42.

-

Watts S, Stenner P. Doing Q methodology: theory, method and interpretation. Qual Res Psychol. 2005;2(1):67–91.

-

Barbosa JC, Willoughby P, Rosenberg CA, Mrtek RG. Statistical methodology: VII. Q-methodology, a structural analytic approach to medical subjectivity. Acad Emerg Med. 1998;5(10):1032–40.

-

Valenta AL, Wigger U. Q-methodology: definition and application in health care informatics. J Am Med Inform Assoc. 1997;4(6):501–10.

-

Barker JH. Q-methodology: an alternative approach to research in nurse education. Nurse Educ Today. 2008;28(8):917–25.

-

Ten Cate O. Nuts and bolts of entrustable professional activities. J Grad Med Educ. 2013;5(1):157–8.

-

Ten Cate O. A primer on entrustable professional activities. Korean J Med Educ. 2018;30(1):1–10.

-

American Board of Internal Medicine. Critical Care Medicine Certification Examination Blueprint 2020 [Available from: https://www.abim.org/~/media/ABIM%20Public/Files/pdf/exam-blueprints/certification/critical-care-medicine.pdf.

-

Fessler HE, Addrizzo-Harris D, Beck JM, Buckley JD, Pastores SM, Piquette CA, et al. Entrustable professional activities and curricular milestones for fellowship training in pulmonary and critical care medicine: report of a multisociety working group. Chest. 2014;146(3):813–34.

-

Society of Critical Care Medicine. Fundamental Critical Care Support Courses. 2020. Available from: https://www.sccm.org/Fundamentals

-

Accreditation Council for Graduate Medical Education. The Internal Medicine Milestone Project. 2020.

-

Agency for Healthcare Research and Quality. TeamSTEPPS. 2020.

-

Accreditation Council for Graduate Medical Education. Clinical Learning Environment Review. 2020.

-

Hu X, Xi X, Ma P, Qiu H, Yu K, Tang Y, et al. Consensus development of core competencies in intensive and critical care medicine training in China. Crit Care. 2016;20(1):330.

-

Banasick S. Ken-Q Analysis, A Web Application for Q Methodology. 2019.

-

Institute for Healthcare Improvement. 2020.

-

Guo Y, Sippola E, Feng X, Dong Z, Wang D, Moyer CA, et al. International medical school faculty development: the results of a needs assessment survey among medical educators in China. Adv Health Sci Educ. 2009;14(1):91–102.

Acknowledgments

The authors would like to acknowledge Dr. Can Wang for contributing to the statistical analysis.

Funding

Mayo Clinic Abroad Small Grants Program (Oct 2019).

Author information

Contributions

Concept and design: AN, OG, and YD; Protocol development: AN, YD, YS and HL; Data retrieval and statistical analysis: WC, YS, AB, and HL; Drafting of the manuscript: HL; Critical revision of the manuscript: QY, AB, LQ, AT, and AN; Approval of the final draft: All the authors.

Ethics declarations

Ethics approval and consent to participate

Informed consent was obtained from all participants. All methods were carried out in accordance with relevant guidelines and regulations. The study was approved by the Mayo Clinic Institutional Review Board (20–007896) and the Ethics Committee at Shengli Oilfield Central Hospital (Q/ZXYY-ZY-YWB-LL202039). The Ethics Committee at Shengli Oilfield Central Hospital waived informed consent for the patients whose charts were reviewed with research authorization.

Consent for publication

All authors gave their approval to publish the article.

Consent for publication was obtained from all survey participants.

Consent to publish from patients was waived because there were no identifying images or other personal or clinical details that could compromise patients' anonymity.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Supplemental Table 1. Johari window: different states of learning needs. Supplemental Table 2. Examples of learning needs assessment tools. Supplemental Fig. 1. Q survey, a ranking activity to express learning priorities. Supplemental Table 3. Patient baseline information on the day of admission.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Li, H., Sun, Y., Barwise, A. et al. A novel multimodal needs assessment to inform the longitudinal education program for an international interprofessional critical care team. BMC Med Educ 22, 540 (2022). https://doi.org/10.1186/s12909-022-03605-2

Keywords

- Medical continuing education

- Medical training

- Learning needs assessment

- Critical care

- Intensive care

- Entrustable professional activity

- Curricular milestones

- Delphi

- Q method

Source: https://bmcmededuc.biomedcentral.com/articles/10.1186/s12909-022-03605-2
